Kubernetes is a continuously evolving technology strongly supported by the open source community. In our last article, What’s new in Kubernetes 1.25, we covered the latest features that have been integrated. Among these, container checkpointing may have great potential in future containerized environments because it can provide interesting forensic capabilities.

Indeed, the Container Checkpointing feature allows you to checkpoint a running container. This means that you can save the current container state in order to resume it later, without losing any information about the running processes or the data stored in it. It has already been integrated into several container engines, but has only just become available in Kubernetes, and only as an alpha feature.

In this article, we will learn more about this new functionality, how it works, and why it can become very useful in forensic analysis.

Stateless vs. stateful

Before delving into the details of this new functionality, let’s recall one of the oldest debates on containerization: statelessness vs. statefulness. Also, even before that, let’s clarify what a “state” is for containers.

A container’s state can be considered the whole set of resources related to the container’s execution: running processes, internal operations, open file descriptors, data accessed by the container itself, the container’s interactions, and much more.

Many advocates of the stateless cause affirm that containers, once created, do their job and then should disappear, sometimes leaving the floor to other containers. However, this approach is far from reality, mainly because most of the applications that run in containers today were not originally conceived with a containerized approach. Indeed, many are just legacy applications that have been containerized and still rely on the application state.

So, stateful containers are still in use and this also implies that keeping, storing, and resuming a container’s state with the container checkpointing feature can be useful.

Let’s consider some use cases that may best represent this functionality’s benefits to containerized environments:

- Containers that take a long time to start (sometimes also several minutes). By storing the state of the container once it has been started, the application can be launched already running, skipping the cold-start phase.

- One of your containers is not working properly, probably because it has been compromised. Moving the container to a sandbox environment without losing information lets you triage the impact of the incident and its cause, which helps prevent future problems.

- Migration process of your running containers. You may want to move containers from one system to another, without losing their current state.

- Update or restart your host. Maintaining containers without losing the state may be a requirement.

All of these scenarios can be addressed by container checkpoint and restore capabilities.

Container Checkpoint/Restore

Checkpointing the container state allows you to freeze the container and take a snapshot of its execution, resources, and details, creating a container backup and writing it to disk. The container, once resumed, will continue its job without even knowing it has been stopped and, for example, moved to another host.

All of this was made possible with the help of the CRIU project (Checkpoint/Restore In Userspace) which, as the name suggests, provides checkpoint/restore functionality for Linux.

Extending CRIU capabilities to containers, however, did not begin with the latest Kubernetes release. Checkpointing/restoring support was first introduced into many low-level interfaces, from container runtime (e.g., runc, crun) to container engine (e.g., CRI-O, containerd), until arriving at the Kubelet layer.

In the cloud, there is something similar: AWS snapshots, for example. However, these capture only the cold state, not a full copy of the hot state, so container checkpointing provides more information when it is needed for forensic analysis. To understand this feature in depth, let’s take a look at it from different perspectives.

Kubernetes container checkpoint

The minimal checkpointing support PR was merged into Kubernetes v1.25.0 as an alpha feature, almost two years after the Container Checkpointing KEP (Kubernetes Enhancement Proposal) was proposed. This KEP had the specific goal of checkpointing containers (and NOT pods), extending this capability to the kubelet API. In parallel, this PR was also supported by changes implemented over time in the container runtime interface (CRI) and in container engine interfaces.

Regarding the restoring feature, this has not been integrated into Kubernetes yet, and its support is currently provided only at container engine level. This was done to simplify the new feature’s PR and the changes it made to the API as much as possible.

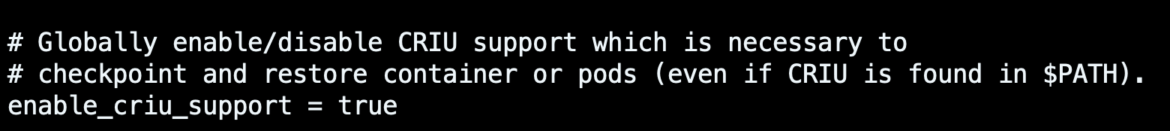

In order to use the checkpointing feature in Kubernetes, the underlying CRI implementation must support that capability. The CRI-O v1.25.0 release gained this support thanks to this PR, but you must first set the enable_criu_support field in the CRI-O configuration file to true.

Since this is an alpha feature, it is also required to enable the feature gate ContainerCheckpoint in the Kubernetes cluster. Here is the kubelet configuration of a cluster spawned with the kubeadm utility:

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=unix:///var/run/crio/crio.sock --pod-infra-container-image=registry.k8s.io/pause:3.8 --feature-gates=ContainerCheckpoint=True"

Once this is done, to trigger the container checkpoint you must talk directly to the kubelet endpoint on the node where the container that you want to checkpoint is scheduled. In a test environment, you can relax the kubelet API access restrictions on that node by setting --anonymous-auth=true and --authorization-mode=AlwaysAllow. Otherwise, you can authenticate and authorize your communication with the kubelet endpoint.

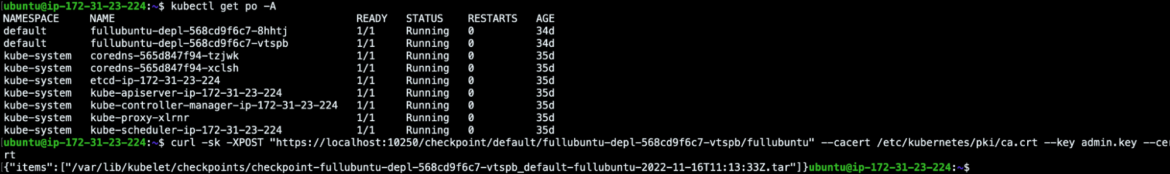

For example, this authorized POST request will checkpoint the container fullubuntu from the fullubuntu-depl-568cd9f6c7-vtspb pod, leveraging the proper cluster certificates and keys:

ubuntu@ip-172-31-23-224:~$ curl -sk -XPOST "https://localhost:10250/checkpoint/default/fullubuntu-depl-568cd9f6c7-vtspb/fullubuntu" --cacert /etc/kubernetes/pki/ca.crt --key admin.key --cert admin.crt

{"items":["/var/lib/kubelet/checkpoints/checkpoint-fullubuntu-depl-568cd9f6c7-vtspb_default-fullubuntu-2022-11-16T11:13:33Z.tar"]}

The response to this POST contains the path of the checkpoint archive, which has been created in the default directory /var/lib/kubelet/checkpoints/.

ubuntu@ip-172-31-23-224:~$ sudo ls -al /var/lib/kubelet/checkpoints/

total 252

drwxr-xr-x 2 root root 4096 Nov 16 11:13 .

drwx------ 9 root root 4096 Nov 16 11:13 ..

-rw------- 1 root root 247296 Nov 16 11:13 checkpoint-fullubuntu-depl-568cd9f6c7-vtspb_default-fullubuntu-2022-11-16T11:13:33Z.tar

What we did can be outlined in the following steps:

- You talk to the Kubelet API via POST request, asking to checkpoint a pod’s container.

- Kubelet will trigger the container engine (CRI-O in our case).

- CRI-O will talk to the container runtime (crun or runc).

- The container runtime talks to CRIU, which is in charge of checkpointing the container and its processes.

- CRIU does the checkpoint, writing it to disk.
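As a rough illustration of the first step, the sketch below builds the kubelet checkpoint URL and extracts the archive paths from the JSON response. The helper names `checkpoint_url` and `parse_checkpoint_response` are hypothetical. The URL layout, the `localhost` host, port 10250, and the response shape are simply taken from the curl example above, so treat them as assumptions for any other cluster.

```python
import json
from typing import List
from urllib.parse import quote


def checkpoint_url(namespace: str, pod: str, container: str,
                   host: str = "localhost", port: int = 10250) -> str:
    """Build the kubelet checkpoint endpoint URL for one container."""
    # Path layout mirrors the curl example: /checkpoint/<namespace>/<pod>/<container>
    path = "/".join(quote(part, safe="") for part in (namespace, pod, container))
    return f"https://{host}:{port}/checkpoint/{path}"


def parse_checkpoint_response(body: str) -> List[str]:
    """Return the checkpoint archive paths listed under "items" in the response."""
    data = json.loads(body)
    return list(data.get("items", []))


# Values copied from the example in this article.
print(checkpoint_url("default", "fullubuntu-depl-568cd9f6c7-vtspb", "fullubuntu"))

sample_body = ('{"items":["/var/lib/kubelet/checkpoints/'
               'checkpoint-fullubuntu-depl-568cd9f6c7-vtspb_default-fullubuntu-'
               '2022-11-16T11:13:33Z.tar"]}')
print(parse_checkpoint_response(sample_body))
```

The sketch deliberately stops short of sending the request: the POST itself still needs the client certificate and key shown in the curl command, or whatever authentication your kubelet is configured for.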

Now that the container checkpoint is created as an archive, it is potentially possible to restore it. But at the time of writing, the checkpointed container can be restored only outside of Kubernetes, at container engine level for example.

All of the above shows that this new feature still has some limitations. However, future enhancements may make it possible not only to checkpoint containers, but also to restore them directly in Kubernetes and to extend the checkpointing/restoring capabilities to pods. That said, pod-level support can be even more complex to address, because all the containers within the pod might need to be frozen and checkpointed at the same time, while also taking care of the dependencies that may exist between containers of the same pod.

Moreover, it is worth mentioning that this Kubernetes functionality in the future could attract a great deal of interest from the community. Indeed, it may come in handy to speed up the startup of all those containers that take a long time to start and are subject to high auto-scaling operations in Kubernetes.

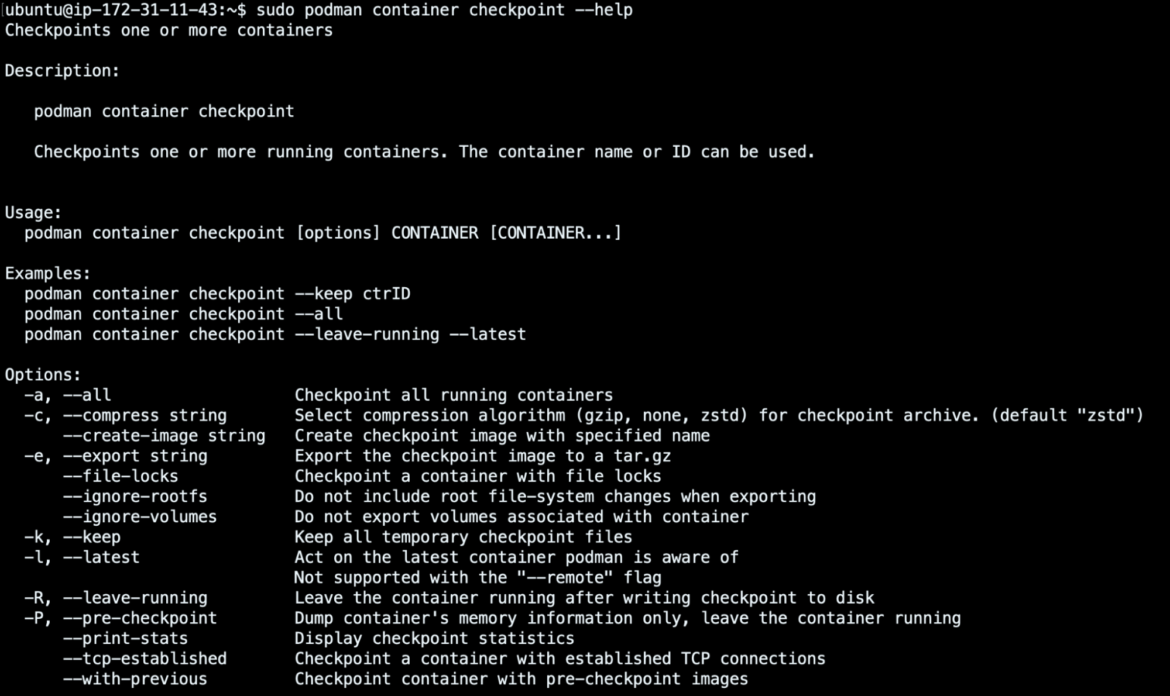

Podman checkpoint feature

Podman introduced support for checkpointing/restoring functionalities in 2018, and is perhaps one of the container engines that best integrates both. Indeed, these features have been rolled in successfully in the latest Podman releases and are also provided with advanced properties.

For example, Podman lets you write checkpointed containers to disk not only as archives, which can be compressed in different formats, but also as local images ready to be pushed to your preferred container registry. Moreover, the restore functionality can restore previously checkpointed images, whether they come from other machines or from a container registry where the checkpointed image was previously pushed.

In order to show such interesting features in depth, let’s take a look at this quick container forensic use case.

Suppose that your tomcat image is running on your machine and is exposed externally, listening on port 8080.

ubuntu@ip-172-31-11-43:~$ sudo podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

05b43a30c650 docker.io/<your_repo>/tomcat:latest catalina.sh run 6 minutes ago Up 6 minutes ago 0.0.0.0:8080->8080/tcp tomcat

You have received abnormal alerts from a runtime security solution (we use Falco, the CNCF incubated project that is able to detect anomalies), and you want to take a look inside the container. So you open a shell into this tomcat container, and you see the following processes running:

ubuntu@ip-172-31-11-43:~$ sudo podman exec -it tomcat /bin/bash

root@05b43a30c650:/usr/local/tomcat# ps -aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 1.3 2.0 3586324 84304 pts/0 Ssl+ 10:51 0:02 /opt/java/openjdk/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties -Djava.uti

root 1507 0.0 0.4 710096 17424 ? Sl 10:52 0:00 /etc/kinsing

root 1670 0.0 0.0 0 0 ? Z 10:53 0:00 [pkill] <defunct>

root 1672 0.0 0.0 0 0 ? Z 10:54 0:00 [pkill] <defunct>

root 1678 0.0 0.0 0 0 ? Z 10:54 0:00 [kdevtmpfsi] <defunct>

root 1679 186 30.7 2585864 1233308 ? Ssl 10:54 0:22 /tmp/kdevtmpfsi

root 1690 0.0 0.0 7632 3976 pts/1 Ss 10:54 0:00 /bin/bash

root 1693 0.0 0.0 10068 1564 pts/1 R+ 10:54 0:00 ps -aux

It seems that your container was compromised, and the running processes suggest that it launched Kinsing, a malware that we have already covered in another article!

In order to investigate the container execution, you may want to freeze the container state and store it locally. This will give you the opportunity to resume its execution later, even in an isolated environment if you prefer.

To do this, you can checkpoint the affected container, using Podman and its container checkpoint command.

To checkpoint the tomcat container writing its state to disk, also keeping track of the established TCP connections, you can launch the following command:

ubuntu@ip-172-31-11-43:~$ sudo podman container checkpoint --export tomcat-checkpoint.tar.gz --tcp-established tomcat

ubuntu@ip-172-31-11-43:~$ ls | grep checkpoint

tomcat-checkpoint.tar.gz

Once checkpointed without the --leave-running option, the container won’t be in the running state anymore.

ubuntu@ip-172-31-11-43:~$ sudo podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

At this point, to restore the container execution, you can resume its state from the archive that you previously wrote. After launching the container restore command, the container will run again, exposed on the same port and with the same container ID.

Opening a shell into the container, you can also see the same processes as before, with the same PIDs, as if they had never been interrupted.

ubuntu@ip-172-31-11-43:~$ sudo podman container restore -i tomcat-checkpoint.tar.gz --tcp-established

05b43a30c650e3e22b99d63d328bb9f7b5cb2224cf54ce39aab20382ae95813e

ubuntu@ip-172-31-11-43:~$ sudo podman ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

05b43a30c650 docker.io/<your_repo>/tomcat:latest catalina.sh run About a minute ago Up About a minute ago 0.0.0.0:8080->8080/tcp tomcat

ubuntu@ip-172-31-11-43:~$ sudo podman exec -it tomcat /bin/bash

root@05b43a30c650:/usr/local/tomcat# ps -aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.3 1.5 3586324 61420 pts/0 Ssl+ 11:49 0:00 /opt/java/openjdk/bin/java -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties -Djava.uti

root 42 0.0 0.1 7632 4032 pts/1 Ss 11:50 0:00 /bin/bash

root 46 0.0 0.0 10068 3324 pts/1 R+ 11:50 0:00 ps -aux

root 1507 0.0 0.3 710352 14420 ? Sl 11:49 0:00 /etc/kinsing

root 1670 0.0 0.0 0 0 ? Z 11:49 0:00 [pkill] <defunct>

root 1672 0.0 0.0 0 0 ? Z 11:49 0:00 [pkill] <defunct>

root 1678 0.0 0.0 0 0 ? Z 11:49 0:00 [kdevtmpfsi] <defunct>

root 1679 71.8 59.7 2789048 2397280 ? Ssl 11:49 0:40 /tmp/kdevtmpfsi

In this case, the restore operation was done from the archive written on the same machine where the container was breached and checkpointed. During forensic analysis, however, the restore usually takes place in a separate, sandboxed, and isolated environment, for example after transferring the checkpoint archive via scp.
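When an archive is copied to another machine for analysis, it can be useful to confirm that the copy is identical to the original. This step is not part of the Podman workflow above; it is an assumption about how one might handle the archive. A minimal sketch, with the hypothetical helper `sha256_file`, computes a digest that can be compared on both sides of the transfer:

```python
import hashlib
import os
import tempfile


def sha256_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read fixed-size chunks so large checkpoint archives do not fill memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def demo() -> str:
    """Hash a small temporary file standing in for a checkpoint archive."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "tomcat-checkpoint.tar.gz")
        with open(path, "wb") as fh:
            fh.write(b"abc")  # placeholder bytes, not a real archive
        return sha256_file(path)


print(demo())
```

Running the same function on the source and destination hosts and comparing the two strings is enough to spot a truncated or altered copy.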

Another alternative would have been to use the --create-image option to checkpoint the container as a local image, instead of writing the container checkpoint as an archive with the --export option.

ubuntu@ip-172-31-11-43:~$ sudo podman container checkpoint --create-image tomcat-check --tcp-established tomcat

tomcat

ubuntu@ip-172-31-11-43:~$ sudo podman images

REPOSITORY TAG IMAGE ID CREATED SIZE

localhost/tomcat-check latest a537f64cf3d1 21 minutes ago 2.54 GB

docker.io/<your_repo>/tomcat latest 1ca69d1bf49a 6 days ago 480 MB

With this approach, you can restore your container locally from the newly created image. Or, if you want to do it elsewhere, you can tag and push the image to a container registry, pull it from another machine that supports the checkpoint/restore capabilities, and then restore it there.
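The two checkpoint variants and the restore command used in this section differ only in a few options. The sketch below assembles those command lines as argument lists, which is how they would typically be passed to `subprocess.run`. The helper names are hypothetical, the options are only the ones shown in the commands above, and the `as_image` switch is a simplification of this sketch rather than a statement about how Podman validates its options.

```python
import shlex
from typing import List


def podman_checkpoint_cmd(container: str, target: str, as_image: bool = False,
                          tcp_established: bool = True,
                          leave_running: bool = False) -> List[str]:
    """Build a `podman container checkpoint` command line.

    `target` is an archive path, or a local image name when `as_image` is True.
    """
    cmd = ["podman", "container", "checkpoint"]
    cmd += ["--create-image" if as_image else "--export", target]
    if tcp_established:
        cmd.append("--tcp-established")
    if leave_running:
        cmd.append("--leave-running")
    cmd.append(container)
    return cmd


def podman_restore_cmd(archive: str, tcp_established: bool = True) -> List[str]:
    """Build a `podman container restore` command line for an exported archive."""
    cmd = ["podman", "container", "restore", "-i", archive]
    if tcp_established:
        cmd.append("--tcp-established")
    return cmd


# Print the commands instead of running them; execution needs root and CRIU.
print(shlex.join(podman_checkpoint_cmd("tomcat", "tomcat-checkpoint.tar.gz")))
print(shlex.join(podman_checkpoint_cmd("tomcat", "tomcat-check", as_image=True)))
print(shlex.join(podman_restore_cmd("tomcat-checkpoint.tar.gz")))
```

Building the list first makes it easy to log exactly what was run against a compromised container, which can matter when documenting an investigation.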

CRIU implements checkpoint/restore functionality for Linux

CRIU is the main utility involved in checkpointing/restoring tasks in Linux.

With CRIU, it is possible to freeze an application by writing its state in files to disk. Starting from those files that have been written, you can later restore the application from the time it was frozen. The advantage is that the application will never know it has been stopped and later resumed.

The interaction between CRIU and container interfaces made it possible to extend checkpointing and restoring capabilities from applications to containers as well.

Indeed, the latest CRIU releases provide an up-to-date checkpointing/restoring integration to all the other open source projects at container runtime and container engine levels. Among these, runc was the first one that integrated support for CRIU, and consequently, for checkpointing/restoring features. That said, to provide such functionalities, most of the container runtime projects come with the CRIU dependency already installed.

But how do checkpointing and restoring work in detail?

The checkpointing phase can be summarized as follows:

- CRIU pauses a whole process tree using ptrace(). It means that starting from a specific PID, CRIU will pause it (and all of its children).

- Once all the processes have been stopped, CRIU collects all of their information from the userspace. So, it gathers process information from outside the processes, reading data from /proc/<PID>/*, and from within the processes, using the parasite code injection technique. In such a way, the CRIU binaries will interact with the inside of the process to get a deeper view of it, and will be able to see what the processes themselves can see.

- Once the needed information has been collected and written to disk, the parasite code is removed and the processes will stop or continue their execution.
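To make the "outside" view of the checkpointing phase more concrete, here is a small sketch of two ideas from the steps above: reading process details from the text of a `/proc/<PID>/status` file, and collecting a whole process tree starting from one PID. It is only an illustration. CRIU is far more thorough, and this sketch does not use ptrace or parasite code at all. The function names and the parent/child relationships in the example are hypothetical.

```python
from typing import Dict, List


def parse_status(text: str) -> Dict[str, str]:
    """Parse the 'Key:<tab>value' lines of a /proc/<PID>/status file."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def process_tree(parents: Dict[int, int], root: int) -> List[int]:
    """Return `root` and all of its descendants, given a pid -> parent pid map."""
    children: Dict[int, List[int]] = {}
    for pid, ppid in parents.items():
        children.setdefault(ppid, []).append(pid)
    tree: List[int] = []
    stack, seen = [root], set()
    while stack:
        pid = stack.pop()
        if pid in seen:  # guard against malformed input with cycles
            continue
        seen.add(pid)
        tree.append(pid)
        # Push children in reverse so that lower PIDs are visited first.
        stack.extend(sorted(children.get(pid, []), reverse=True))
    return tree


# Sample text in the /proc/<PID>/status format (values are illustrative).
sample = "Name:\tjava\nState:\tS (sleeping)\nPid:\t1\nPPid:\t0\n"
print(parse_status(sample)["PPid"])

# Hypothetical parent map: PIDs 1507 and 1679 are assumed to descend from PID 1.
parents = {1: 0, 1507: 1, 1679: 1, 1690: 0, 1693: 1690}
print(process_tree(parents, 1))
```

On a Linux host, the same parser could be fed the contents of `/proc/self/status` to see the real fields for the current process.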

Instead, the restoring phase must reconstruct the entire process tree. To do this, the following steps are taken:

- CRIU clones each PID/TID via the clone() or clone3() syscalls.

- CRIU morphs the newly created processes into the original ones, as they were at checkpoint time. This means that PIDs, file descriptors, memory pages, and everything else that was related to the original processes will be the same as during the checkpoint.

- At the end, security settings will be loaded as well. So, seccomp, SELinux, AppArmor labels will be applied as originally.

- Finally, CRIU jumps into the restored process, which continues its execution without even knowing it was paused, checkpointed, and resumed.

Benefits of container checkpointing/restoring

Container checkpointing/restoring features can bring many advantages to containerized environments, as reported above and explained by the use cases. However, there are also other considerations that need to be mentioned regarding the performance and the impact of these functionalities.

- The container cold-start phase can take many seconds (minutes in some more complex scenarios). Restoring the container directly from its checkpoint can be faster than creating and starting the container again. This can save you a lot of time, especially if your container is subject to frequent auto-scaling operations.

- The checkpoint will include everything that is inside the container. At the time of this writing, any attached external devices, mounts, or volumes are excluded from the checkpoint.

- The more memory the checkpointed container uses, the higher the disk usage. Each file that has been changed compared to the original version will also be checkpointed. This means that disk usage will increase by the container's used memory plus its changed files.

- Writing container memory pages to disk can result in increased disk IO operations during checkpoint creation, and consequently lower performance. Moreover, the checkpoint duration will heavily depend on the number of memory pages of the running container processes.

- The created checkpoint contains all the memory pages and thus potentially also all those secrets that were expected to be in memory only. This implies that it becomes crucial to pay attention to where the checkpoint archives are stored.

- Checkpoint/restore failures could be difficult to debug, because issues can arise in several layers of the technology stack, from the kubelet down to CRIU.
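Because checkpoint archives may contain secrets from memory, one simple precaution is to verify that they are readable by their owner only, as in the `-rw-------` listing shown earlier. The sketch below is a minimal check for POSIX systems. The function names are hypothetical, and the `.tar` suffix filter is an assumption based on the archive names in this article.

```python
import os
import stat
import tempfile
from typing import List


def is_private(path: str) -> bool:
    """Return True if the file grants no permissions to group or others."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & 0o077 == 0


def loose_checkpoints(directory: str) -> List[str]:
    """Return .tar files in `directory` that are accessible beyond their owner."""
    found = []
    for name in sorted(os.listdir(directory)):
        path = os.path.join(directory, name)
        if name.endswith(".tar") and os.path.isfile(path) and not is_private(path):
            found.append(path)
    return found


def demo() -> List[str]:
    """Create two empty sample archives and report the loosely protected one."""
    with tempfile.TemporaryDirectory() as tmp:
        for name, mode in (("private.tar", 0o600), ("shared.tar", 0o644)):
            path = os.path.join(tmp, name)
            with open(path, "wb"):
                pass
            os.chmod(path, mode)
        return [os.path.basename(p) for p in loose_checkpoints(tmp)]


print(demo())
```

Pointing `loose_checkpoints` at the directory where your archives are stored gives a quick list of files whose permissions deserve a second look.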

Conclusion and references

The open source ecosystem is continuously evolving and may bring many new capabilities to the containerized world. Container checkpointing and restoring are among these. They can become very useful in the near future and can provide new ways to perform forensic analysis, speed up the container cold-start phase, or allow smoother container migration.

If you are dealing with stateful apps, such features can improve container management. On the other hand, these capabilities are antithetical to the stateless paradigm. As a result, checkpointing stateless containers is useless.

That said, before adopting such solutions in their environments, users should be aware of the current limitations of container checkpointing and restoring.

If you are curious to learn more about this topic, we strongly suggest you take a look at these pull requests on GitHub and this documentation:

- https://github.com/kubernetes/kubernetes/pull/104907

- https://github.com/kubernetes/enhancements/pull/1990

- https://github.com/cri-o/cri-o/pull/4199

- https://github.com/kubernetes/enhancements/tree/master/keps/sig-node/2008-forensic-container-checkpointing

- https://kubernetes.io/docs/reference/node/kubelet-checkpoint-api/

- https://podman.io/getting-started/checkpoint

- https://github.com/checkpoint-restore/criu

Also, here are some talks by Adrian Reber, the main contributor to these new features: