The Sysdig 2023 Cloud-Native Security and Container Usage Report sheds some light on how organizations are managing their cloud environments. Based on data from real-world customers, the report is a snapshot of the state of cloud-native in 2023, aggregating data from billions of containers.

Our report retrieves data from cloud projects in the following areas:

- Number of containers that are using less CPU and memory than they were allocated.

- Number of containers with no CPU limits set.

- Overallocation and estimated losses.

Limits and requests

Over the years, we have covered the importance of Limits and Requests. Simply put, limits specify the maximum amount of a computing resource that a container may use, and requests specify the amount it is guaranteed.

But they are more than that – they also indicate your company’s intention for a particular process. They can define the eviction tier level and the Quality of Service for the Pods running those containers.
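To make the link between requests, limits, and Quality of Service concrete, here is a minimal sketch of how a Pod's QoS class follows from its containers' settings. The `qos_class` function and the dictionary layout are illustrative assumptions, not a Kubernetes API, and quantities are compared as plain strings, so "1" and "1000m" would be treated as different values.

```python
from typing import Dict, List

# Hypothetical container shape: {"requests": {...}, "limits": {...}}
Container = Dict[str, Dict[str, str]]
RESOURCES = ("cpu", "memory")


def qos_class(containers: List[Container]) -> str:
    """Approximate the QoS class Kubernetes would assign to a Pod."""
    any_set = False
    all_guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        for resource in RESOURCES:
            limit = limits.get(resource)
            # An unset request defaults to the limit, if there is one.
            request = requests.get(resource, limit)
            if request is not None or limit is not None:
                any_set = True
            # Guaranteed needs a limit on both resources, equal to the request.
            if limit is None or request != limit:
                all_guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if all_guaranteed else "Burstable"


print(qos_class([{"requests": {"cpu": "250m"}}]))  # prints "Burstable"
```

Under node pressure, BestEffort Pods are generally the first candidates for eviction and Guaranteed Pods the last, which is why leaving both values unset is a decision in itself.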

Our study shows that:

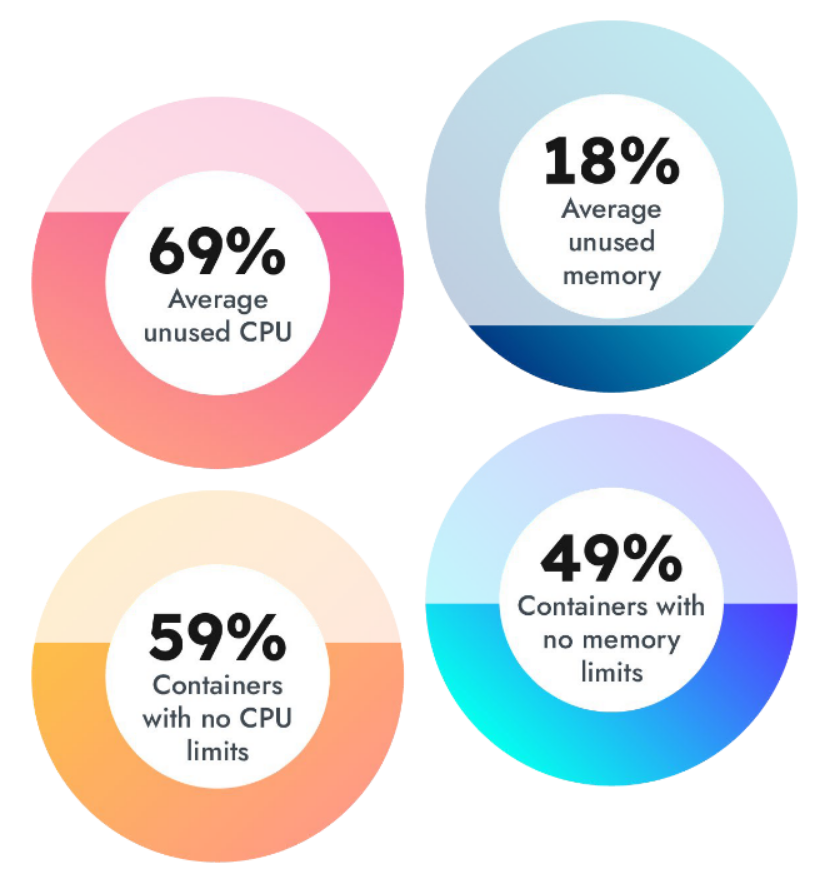

- 49% of containers have no memory limits set.

- 59% of containers have no CPU limits set.

While setting memory limits can have negative side effects, it’s important to set CPU limits so that a container with a drastic spike in CPU consumption cannot starve other processes.

59% containers with no CPU limits

Our study showed that 59% of containers had no CPU limits set at all. Adding CPU limits can lead to throttling, but the report also shows that, on average, 69% of purchased CPU went unused, which suggests that no capacity planning was in place.

49% containers with no memory limits

Almost half of the containers had no memory limits at all. This case is special, since adding a memory limit means that a container that exceeds it can be terminated with an out-of-memory (OOM) error.

Kubernetes overallocation

Cloud providers give plenty of options to run applications with a single click, which is a great way to kickstart the monitoring journey. However, cloud-native companies tend to allocate more resources than they need just to avoid becoming saturated, which can lead to astronomical costs.

Why does this happen?

- Urge to scale quickly

- Lack of resource consumption visibility

- Multi-tenant scaling

- Lack of Kubernetes knowledge

- Lack of capacity planning

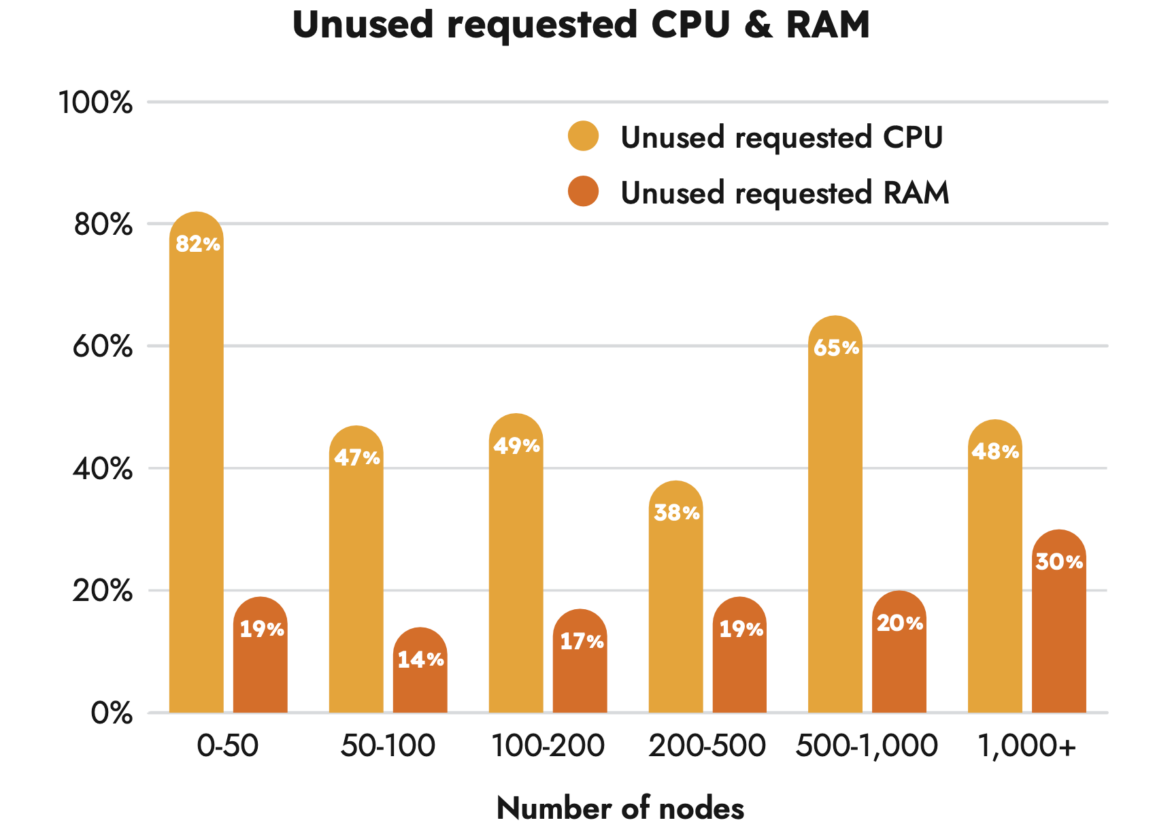

Since CPU is the most costly resource in a cloud instance, companies might be overspending on something they are never going to use.

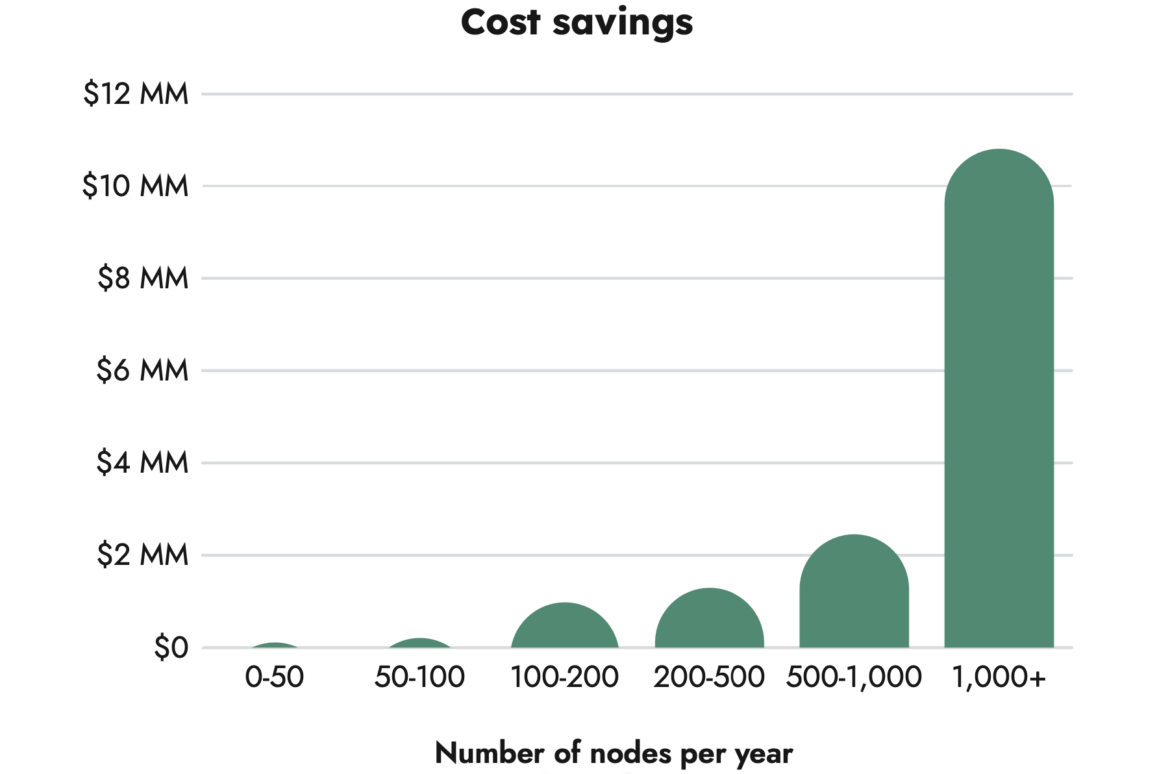

Using average AWS node pricing for CPU and memory, we can calculate the average savings for companies that address these problems.

Specifically, our report showed that companies with more than 1,000 nodes could reduce their wasted resources by $10M per year.
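As a rough illustration of how such an estimate can be put together, the sketch below multiplies paid core-hours by a price and by the unused fraction. Only the 69% unused CPU figure comes from the report; the node count, cores per node, and price per core-hour are placeholder assumptions, and the `yearly_cpu_waste` helper is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365


def yearly_cpu_waste(nodes: int, cores_per_node: int,
                     price_per_core_hour: float, unused_fraction: float) -> float:
    """Estimate yearly spend on CPU that is paid for but never used."""
    total_core_hours = nodes * cores_per_node * HOURS_PER_YEAR
    return total_core_hours * price_per_core_hour * unused_fraction


# Placeholder inputs: replace them with your own fleet size and pricing.
waste = yearly_cpu_waste(nodes=1000, cores_per_node=16,
                         price_per_core_hour=0.04, unused_fraction=0.69)
print(f"Estimated yearly CPU waste: ${waste:,.0f}")
```

The same calculation can be repeated for memory with a price per GiB-hour to get a combined figure.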

CPU overcommitment

If the sum of the CPU limits set on a node is higher than the CPU the node actually has, the node description (kubectl describe node) will display:

Allocated resources: (Total limits may be over 100 percent, i.e., overcommitted.)

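This means that the node can run at a higher CPU utilization, but if the containers all try to reach their limits at the same time, they will compete for CPU and some processes will be throttled.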
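The percentage behind that message can be reproduced by hand. The following is a minimal sketch that sums CPU limits and compares them to a node's allocatable CPU; the helper names and the sample values are assumptions for illustration, and only the plain and millicore ("m") CPU notations are handled.

```python
from typing import Iterable


def cpu_to_millicores(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity such as "500m" or "1.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def cpu_limit_commitment(limits: Iterable[str], allocatable: str) -> float:
    """Return the sum of CPU limits as a percentage of allocatable CPU."""
    total = sum(cpu_to_millicores(quantity) for quantity in limits)
    return 100 * total / cpu_to_millicores(allocatable)


# Three containers with limits of 0.5, 2, and 1.5 cores on a 2-core node.
percent = cpu_limit_commitment(["500m", "2", "1500m"], allocatable="2")
print(f"CPU limits: {percent:.0f}% of allocatable")  # prints "CPU limits: 200% of allocatable"
```

Any value above 100% means the node is overcommitted on CPU limits.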

Cost reduction strategies

Capacity planning

By tracking resource usage with monitoring tools and performing capacity planning, companies can reduce these costs with a clear return on investment. Both Limits and Requests are useful tools for restricting usage, but they can be cumbersome, since poorly chosen values can lead to Pod eviction or overcommitment.

LimitRanges are a useful tool to automatically assign default values, and to enforce minimum and maximum values, for the limits and requests of all containers within a namespace.
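As a sketch of what such an object can look like, the snippet below builds a LimitRange manifest as JSON, which kubectl accepts alongside YAML. The object name, the namespace, and every CPU and memory value are illustrative assumptions, not recommendations.

```python
import json


def limit_range(name: str, namespace: str) -> dict:
    """Build a LimitRange manifest with illustrative per-container values."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "limits": [{
                "type": "Container",
                # Applied when a container sets no request of its own.
                "defaultRequest": {"cpu": "250m", "memory": "128Mi"},
                # Applied when a container sets no limit of its own.
                "default": {"cpu": "500m", "memory": "256Mi"},
                # Bounds that explicitly set values must respect.
                "min": {"cpu": "100m", "memory": "64Mi"},
                "max": {"cpu": "2", "memory": "1Gi"},
            }]
        },
    }


manifest = limit_range("container-defaults", "team-a")
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with kubectl apply -f.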

Autoscaling

Both vertical autoscaling (adjusting the resources assigned to each Pod on demand) and horizontal autoscaling (increasing or decreasing the number of Pods based on utilization) can be used to dynamically adapt to the current needs of your cloud-native solution.
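For horizontal autoscaling, the Kubernetes documentation describes the core calculation as the current replica count scaled by the ratio of the observed metric to its target, rounded up. Here is a minimal sketch of that formula; the function name is hypothetical, the 0.1 tolerance mirrors the documented default, and real autoscalers also apply minimum and maximum replica bounds and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Return the replica count suggested by the horizontal scaling formula."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 Pods at 90% average CPU utilization with a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # prints 6
```

The same formula scales down: 10 Pods at 30% utilization against a 60% target yields 5.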

ResourceQuota

Companies with multi-tenant solutions might run into the problem that some of their projects are more demanding than others in terms of resources. Because of this, assigning the same resources to every project might eventually cause overspending.

That’s why you can use ResourceQuotas to set the maximum amount of a resource that all Pods in a namespace can consume in total.
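A per-tenant quota might look like the following sketch, which builds a ResourceQuota manifest as JSON. The quota name, the namespace, and all of the amounts are assumptions chosen for illustration; size them from your own usage data.

```python
import json


def resource_quota(name: str, namespace: str, hard: dict) -> dict:
    """Build a ResourceQuota manifest capping a namespace's total consumption."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"hard": hard},
    }


# Illustrative caps for a hypothetical "team-a" namespace.
quota = resource_quota("team-a-quota", "team-a", {
    "requests.cpu": "10",
    "requests.memory": "20Gi",
    "limits.cpu": "20",
    "limits.memory": "40Gi",
    "pods": "50",
})
print(json.dumps(quota, indent=2))
```

Once a quota on CPU or memory is active in a namespace, new Pods there must declare requests or limits for that resource or they are rejected, which is one reason quotas are often paired with default values.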

Conclusion

There has been rapid growth in the number of companies investing in cloud solutions in recent years.

But with great power comes great responsibility. Cloud projects need to find a balance when allocating resources like CPU or memory.

Generally, they want to allocate enough so they never have saturation problems. But, on the other hand, over-allocating will lead to massive spending on unused resources.

The solution? Capacity planning, autoscaling, and visibility into costs are the best tools to take back control over your cloud-native spending.

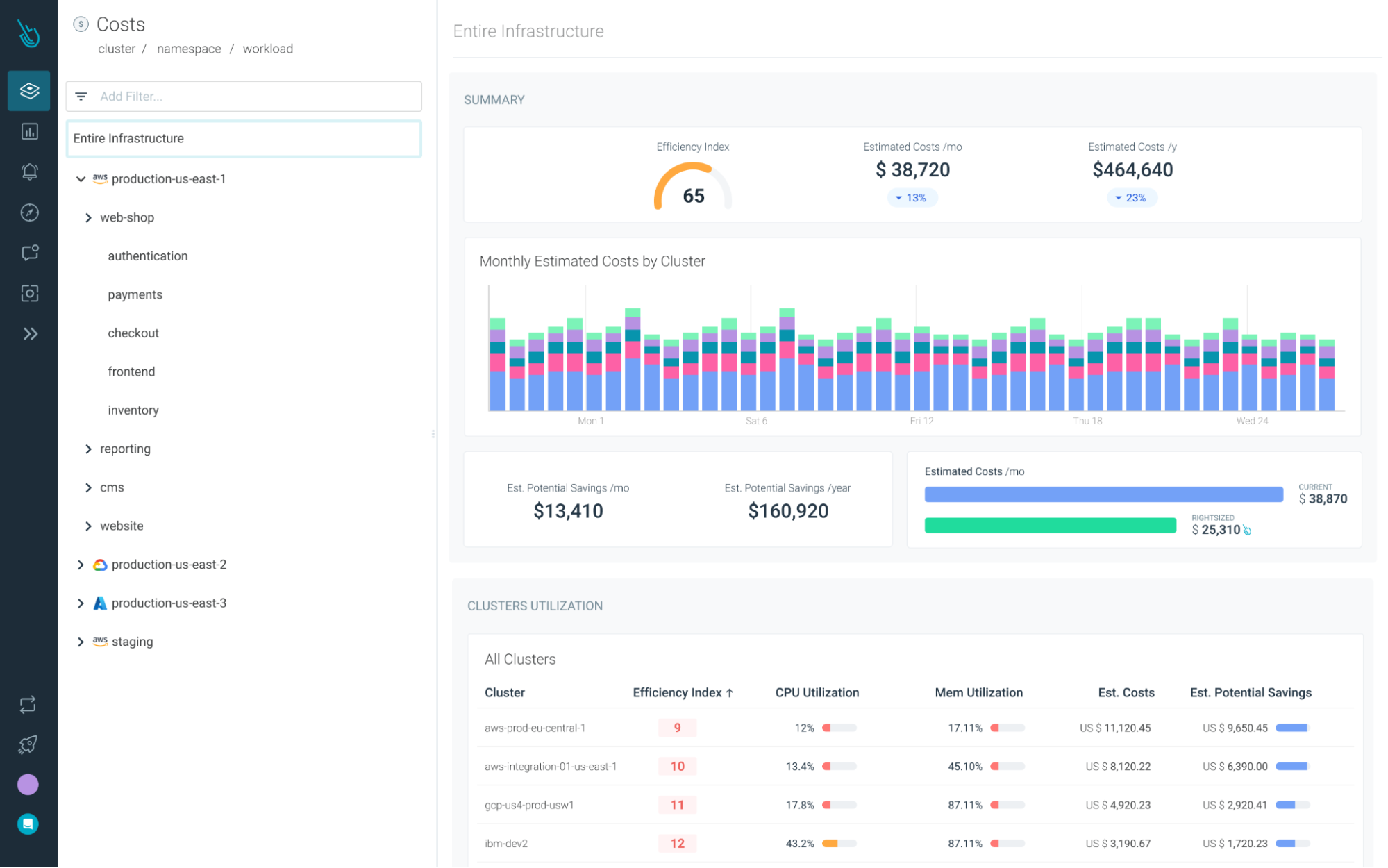

Reduce your Kubernetes costs with Sysdig Monitor

Sysdig Monitor can help you reach the next step in the Monitoring Journey.

With Cost Advisor, you can reduce Kubernetes resource waste by up to 40%.

And with our out-of-the-box Kubernetes Dashboards, you can discover underutilized resources in a couple of clicks.

Try it free for 30 days!